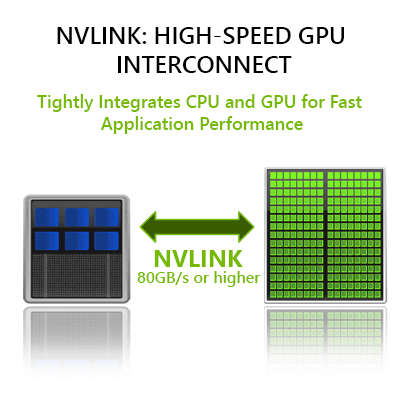

NVIDIA NVLINK

Designed for Accelerated Computing

NVIDIA® NVLink™ is a high-bandwidth, energy-efficient interconnect that enables ultra-fast communication between the CPU and GPU, and between GPUs. The technology allows data sharing at rates 5 to 10 times faster than the traditional PCIe Gen3 interconnect, resulting in dramatic speed-ups in application performance and creating a new breed of high-density, flexible servers for accelerated computing.

In addition to speeding up CPU-to-GPU communication in systems with an NVLink CPU connection, NVLink can also significantly improve the performance of GPU-to-GPU (peer-to-peer) communication.

Advantages Over PCI Express 3.0

Today's GPUs are connected to x86-based CPUs through the PCI Express (PCIe) interface, which limits the GPU's ability to access the CPU memory system and is four to five times slower than typical CPU memory systems. PCIe is an even greater bottleneck between the GPU and IBM POWER CPUs, which have more memory bandwidth than x86 CPUs. Because the NVLink interface will match the bandwidth of typical CPU memory systems, it will enable GPUs to access CPU memory at its full bandwidth.

This high-bandwidth interconnect will dramatically improve accelerated software application performance. Because of memory system differences (GPUs have fast but small memories, and CPUs have large but slow memories), accelerated computing applications typically move data from the network or disk storage to CPU memory, and then copy the data to GPU memory before the GPU can process it. With NVLink, data moves between CPU memory and GPU memory much faster, which shortens this copy step and makes GPU-accelerated applications run faster.
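To make the copy step described above concrete, the following back-of-envelope sketch estimates how long a CPU-to-GPU copy takes at different link bandwidths. It is only an illustrative model, not a measurement: the PCIe Gen3 x16 figure of roughly 16 GB/s per direction is an approximate peak used as an assumption, the 5x and 10x factors come from the range quoted earlier in this brief, and the working-set size, the compute time, and the function names `copy_seconds` and `end_to_end_seconds` are hypothetical.

```python
# Back-of-envelope model of the "copy to GPU, then compute" pattern.
# All numbers here are illustrative assumptions, not measured results.

# Assumed approximate peak bandwidth of a PCIe Gen3 x16 link, GB/s per direction.
PCIE_GEN3_X16_GBPS = 16.0


def copy_seconds(size_gb: float, bandwidth_gbps: float) -> float:
    """Time to move size_gb gigabytes over a link running at bandwidth_gbps GB/s."""
    if bandwidth_gbps <= 0:
        raise ValueError("bandwidth must be positive")
    return size_gb / bandwidth_gbps


def end_to_end_seconds(size_gb: float, bandwidth_gbps: float,
                       compute_seconds: float) -> float:
    """Copy time plus compute time, assuming the two do not overlap."""
    return copy_seconds(size_gb, bandwidth_gbps) + compute_seconds


if __name__ == "__main__":
    size_gb = 32.0         # hypothetical working set copied to GPU memory
    compute_seconds = 1.0  # hypothetical GPU processing time

    # Compare the baseline link with links 5x and 10x faster.
    for label, factor in [("PCIe Gen3 x16", 1), ("5x link", 5), ("10x link", 10)]:
        bandwidth = PCIE_GEN3_X16_GBPS * factor
        copy_time = copy_seconds(size_gb, bandwidth)
        total = end_to_end_seconds(size_gb, bandwidth, compute_seconds)
        print(f"{label:14s} copy={copy_time:.2f}s total={total:.2f}s")
```

Under these made-up inputs, the copy time falls in proportion to link bandwidth, while the end-to-end time improves less because the compute portion is unchanged. How much a real application gains therefore depends on how much of its run time is spent moving data.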