Hadoop / Big Data

Hadoop and Big Data Solution


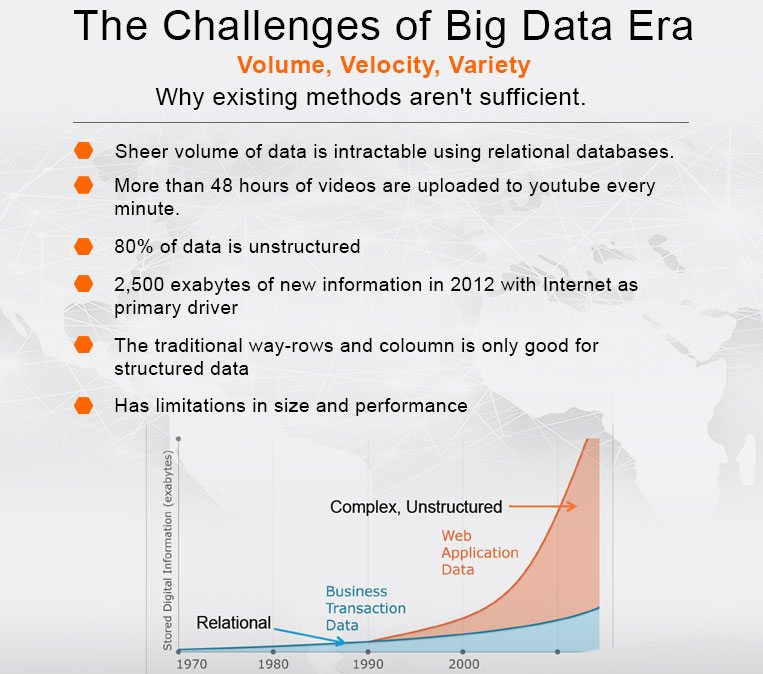

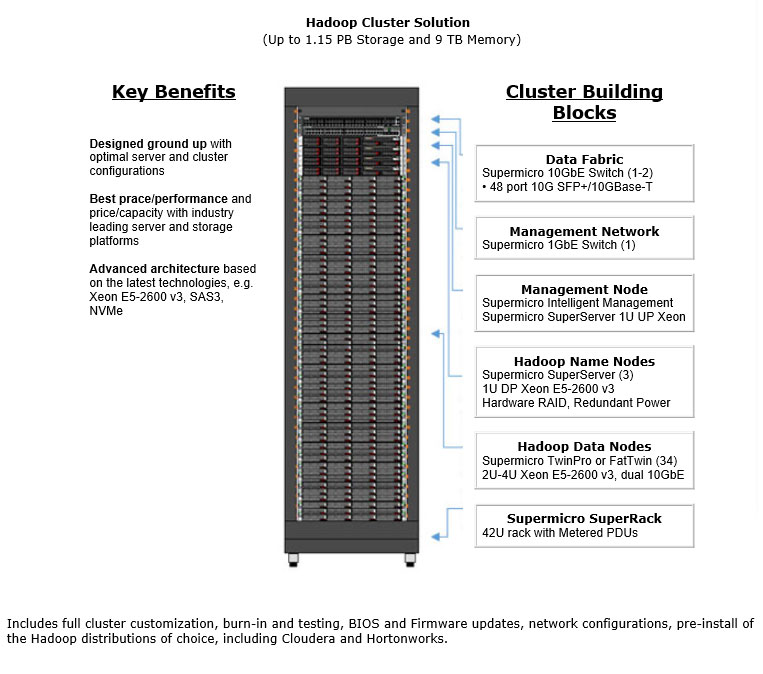

Hadoop is an open-source project administered by the Apache Software Foundation. Hadoop's contributors work for some of the world's biggest technology companies. That diverse, motivated community has produced a genuinely innovative platform for consolidating, combining and understanding data. Enterprises today collect and generate more data than ever before. Relational databases and data warehouse products excel at OLAP and OLTP workloads over structured data. Hadoop, however, was designed to solve a different problem: the scalable, reliable storage and analysis of both structured and complex data. As a result, many enterprises deploy Hadoop alongside their legacy IT systems, allowing them to combine old and new data sets in powerful new ways.

Technically, Hadoop consists of two key services: reliable data storage using the Hadoop Distributed File System (HDFS) and high-performance parallel data processing using a technique called MapReduce. Hadoop runs on a collection of commodity, shared-nothing servers. You can add or remove servers in a Hadoop cluster at will; the system detects and compensates for hardware or system problems on any server. Hadoop, in other words, is self-healing. It can deliver data - and can run large-scale, high-performance processing jobs - in spite of system changes or failures.
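To make the MapReduce technique concrete, here is a minimal sketch of its three steps (map, shuffle, reduce) applied to a word count. It runs in a single Python process and does not use Hadoop's own API; the function names `map_phase`, `shuffle`, `reduce_phase` and `word_count` are illustrative assumptions, not part of Hadoop. On a real cluster, the map and reduce steps would run in parallel across servers, with the input read from HDFS.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    """Map step: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle step: group all emitted values by key.

    In a real cluster the framework does this between the map and
    reduce steps; here it is a plain in-memory dictionary.
    """
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce step: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle and reduce in sequence over an iterable of lines."""
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    # Hypothetical sample input standing in for lines of a large data set.
    sample = ["old data new data", "new insights"]
    print(word_count(sample))
```

Because each map call depends only on its own input line and each reduce call only on its own key, the work can be split across many shared-nothing servers, and a failed piece can simply be rerun elsewhere.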

Will Jaya's optimized racks, designed and developed by experienced engineers, are ideal for getting started with Apache Hadoop. Using Supermicro's optimized servers and switches as a foundation, Will Jaya has designed two racks to get anyone started: a 14U version and a 42U version. Will Jaya has focused on integration, remote systems and power management to shorten deployment and commissioning times and to give developers quick, easy access to native hardware environments. Will Jaya also offers an extensive line of 1U and 2U rack-mount servers optimized for Hadoop.